Introduction

If you've built AI agents before, you know the frustration: they work great in demos, then fall apart in production. The agent crashes on step 8 of 10, and you start over from scratch. The LLM decides to do something completely different today than yesterday. You can't figure out why the agent failed because state is hidden somewhere in conversation history.

I spent months wrestling with these problems before discovering LangGraph. Here's what I learned about building agents that actually work in production.

The Chain Problem: Why Your Agents Keep Breaking

Most developers start with chains—simple sequential workflows where each step runs in order. They look clean:

    result = prompt_template | llm | output_parser | tool_executor

But chains have a fatal flaw: no conditional logic. Every step runs regardless of what happened before. If step 3 fails, you can't retry just that step. If validation fails, you can't loop back. If you need human approval, you're stuck.

Figure: Graph vs. Chains

Production systems need:

- Conditional routing based on results
- Retry logic for transient failures
- Checkpointing to resume from crashes
- Observable state you can inspect
- Error handling that doesn't blow up your entire workflow

That's where graphs come in.

What LangGraph Actually Gives You

LangGraph isn't just "chains with extra steps." It's a fundamentally different approach built around five core concepts:

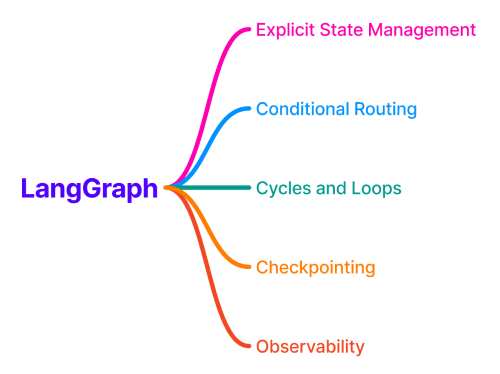

Figure: LangGraph Core Concepts

1. Explicit State Management

Instead of hiding state in conversation history, you define exactly what your agent tracks:

    from typing import Annotated, TypedDict

    from langchain_core.messages import BaseMessage
    from langgraph.graph.message import add_messages


    class AgentState(TypedDict):
        messages: Annotated[list[BaseMessage], add_messages]
        current_stage: str
        retry_count: int
        search_results: list[dict]
        status: str

Now you can inspect state at any point. Debug based on facts, not guesses.

2. Conditional Routing

The killer feature. Your agent can make decisions:

    def route_next(state):
        if state["retry_count"] >= 3:
            return "fail"       # retry budget exhausted
        elif state.get("error"):
            return "retry"      # an error is recorded and retries remain
        else:
            return "continue"

This simple function enables retry loops, error handling, and multi-stage workflows.
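
To make the routing behaviour concrete, here is a minimal sketch that runs the router against three hand-built states. It is plain Python with no LangGraph import; the sample dictionaries and the `error` key are assumptions for illustration only.

```python
def route_next(state: dict) -> str:
    """Pick the name of the next node from the current state."""
    if state["retry_count"] >= 3:
        return "fail"          # retry budget exhausted
    elif state.get("error"):
        return "retry"         # an error is recorded and retries remain
    else:
        return "continue"      # nothing went wrong


# Hypothetical sample states, one per branch.
samples = [
    {"retry_count": 0, "error": None},
    {"retry_count": 1, "error": "timeout"},
    {"retry_count": 3, "error": "timeout"},
]
decisions = [route_next(s) for s in samples]
print(decisions)
```

Note the order of the checks: the retry budget is tested before the error flag, so a persistent error cannot keep the loop alive.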

3. Checkpointing

Save state after every step. If execution crashes on step 8, resume from the checkpoint saved after step 7 instead of starting over:

    import sqlite3
    from langgraph.checkpoint.sqlite import SqliteSaver

    # Recent releases make from_conn_string a context manager, so pass a connection
    checkpointer = SqliteSaver(sqlite3.connect("checkpoints.db", check_same_thread=False))
    app = workflow.compile(checkpointer=checkpointer)

    # Crashes? Just resume
    result = app.invoke(None, config={"configurable": {"thread_id": "123"}})

4. Cycles and Loops

Unlike chains, graphs can loop back. Validation failed? Retry. Output quality low? Refine and try again.
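
The idea of looping back is easier to see in code. The following is a toy graph runner in plain Python, not the LangGraph API: `run_graph`, the `draft` and `check` nodes, and the quality numbers are all made up for illustration. It shows a cycle that keeps refining until a check passes or an attempt limit is hit.

```python
from typing import Callable

State = dict
Node = Callable[[State], dict]


def run_graph(nodes: dict[str, Node], routers: dict[str, Callable[[State], str]],
              start: str, state: State, max_steps: int = 20) -> State:
    """Run nodes until a router returns "END" or the step budget runs out."""
    current = start
    for _ in range(max_steps):
        state = {**state, **nodes[current](state)}   # merge the node's update
        current = routers[current](state)            # ask the router where to go
        if current == "END":
            return state
    raise RuntimeError("step budget exhausted; possible infinite loop")


def draft(state: State) -> dict:
    # Pretend each attempt improves quality by 30 points (made-up numbers).
    return {"attempts": state["attempts"] + 1, "quality": state["quality"] + 30}


def check(state: State) -> dict:
    return {"ok": state["quality"] >= 80}


final = run_graph(
    nodes={"draft": draft, "check": check},
    routers={
        "draft": lambda s: "check",
        # Loop back to "draft" until the check passes or 5 attempts are used.
        "check": lambda s: "END" if s["ok"] or s["attempts"] >= 5 else "draft",
    },
    start="draft",
    state={"attempts": 0, "quality": 0, "ok": False},
)
print(final)
```

The `max_steps` guard plays the same role as a retry counter in state: a cycle always needs a way out.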

5. Full Observability

Stream execution to see exactly what's happening:

    for step in app.stream(state, config):
        # Each chunk maps the node that just ran to the state update it returned
        for node_name, update in step.items():
            print(f"Node: {node_name}, Stage: {update.get('current_stage')}")

No more black boxes.

Building a Real Agent: Research Agent Walkthrough

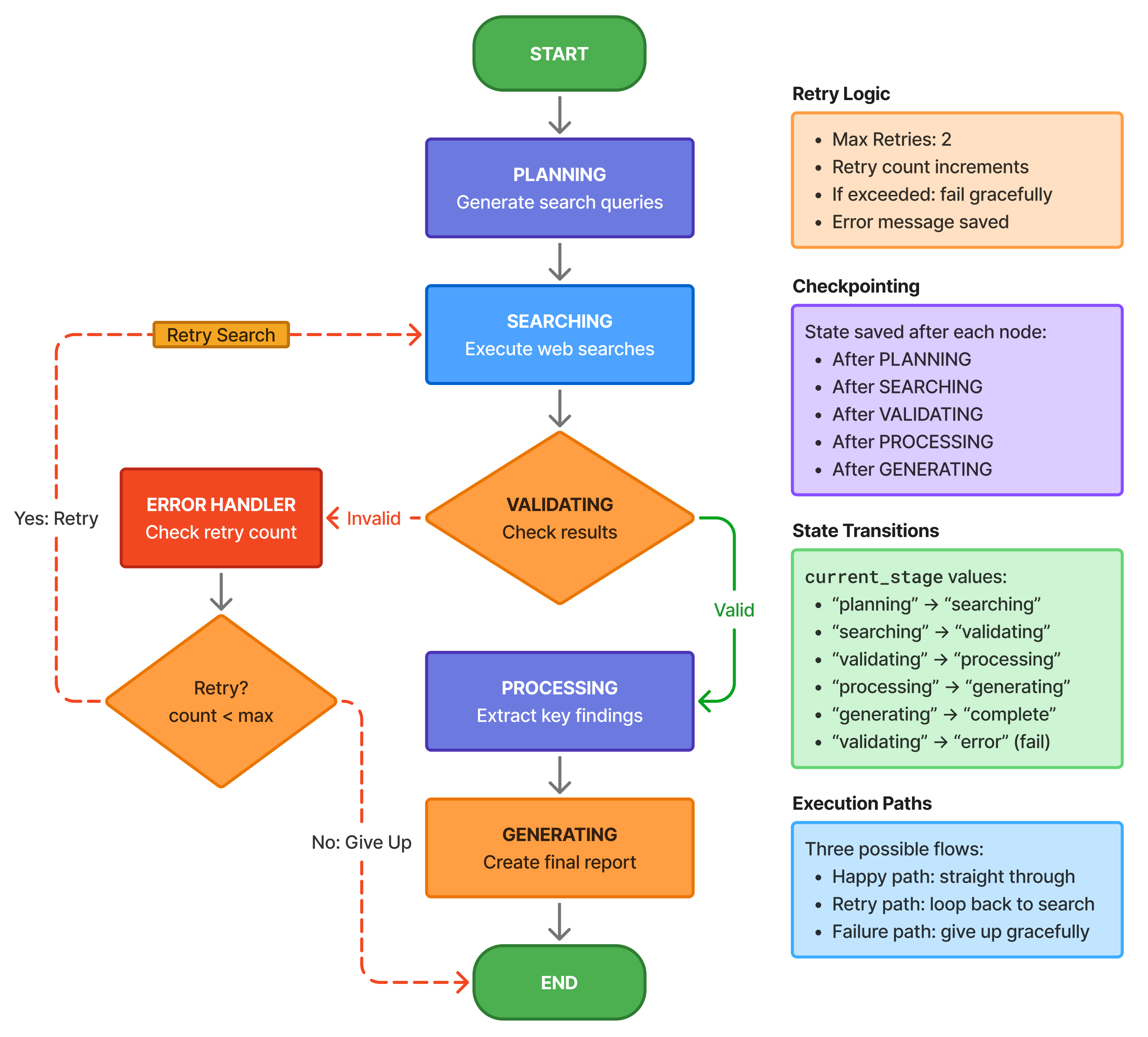

Let me show you how these concepts work in practice. We'll build a research agent that:

- Plans search queries
- Executes searches
- Validates results (retries if insufficient)
- Extracts key findings
- Generates a final report

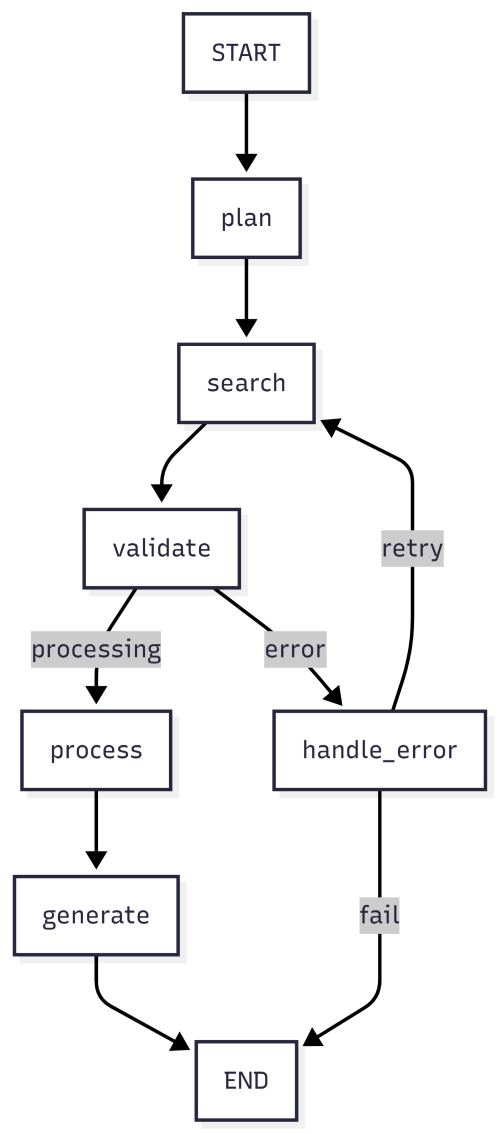

Here's the complete flow:

Figure: Research Agent Flow

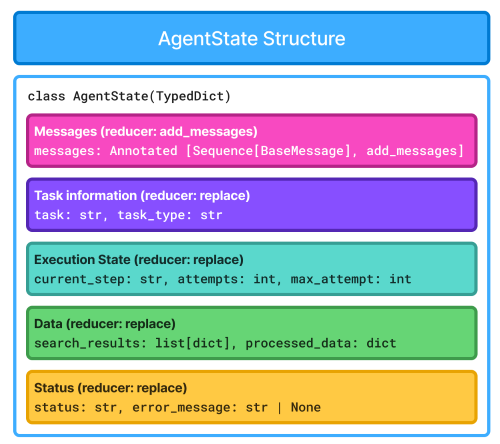

Step 1: Define Your State

State is your agent's memory. Everything it knows goes here:

    from typing import Annotated, Literal, TypedDict

    from langchain_core.messages import BaseMessage
    from langgraph.graph.message import add_messages


    class ResearchAgentState(TypedDict):
        # Conversation
        messages: Annotated[list[BaseMessage], add_messages]

        # Task
        research_query: str
        search_queries: list[str]

        # Results
        search_results: list[dict]
        key_findings: list[str]
        report: str

        # Control flow
        current_stage: Literal["planning", "searching", "validating", ...]
        retry_count: int
        max_retries: int

Figure: Agent State Structure

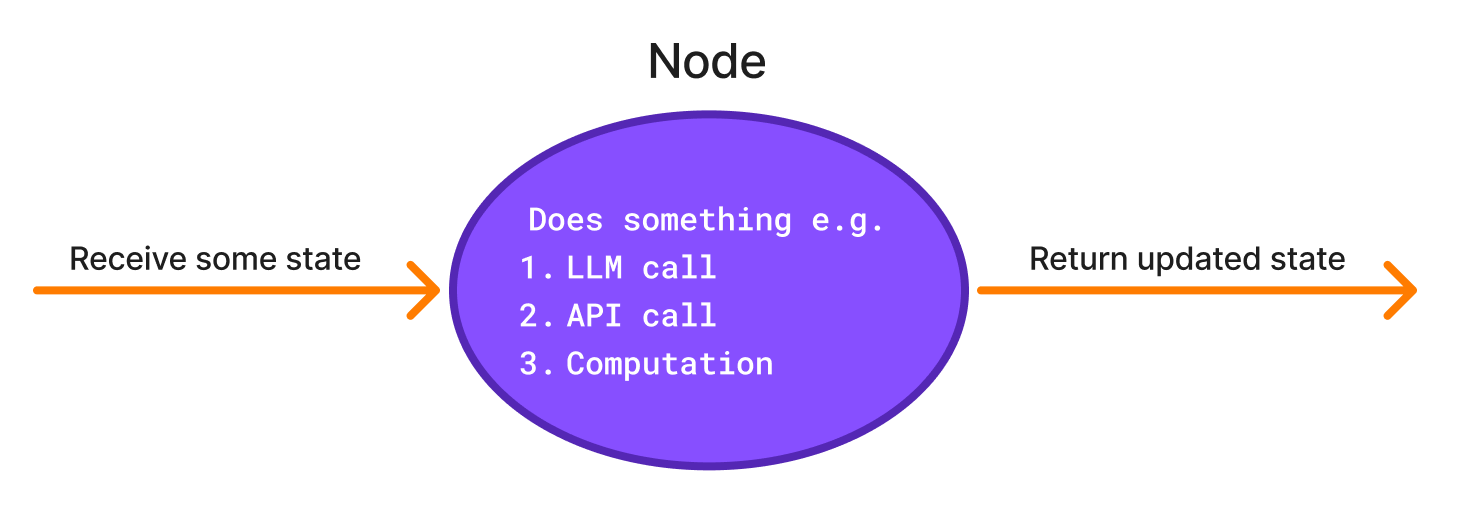

Step 2: Create Nodes

Nodes are functions that transform state. Each does one thing well:

    from langchain_core.messages import HumanMessage, SystemMessage

    # `llm` and `parse_queries` are assumed to be defined elsewhere in the project
    def plan_research(state: ResearchAgentState) -> dict:
        """Generate search queries from research question."""
        query = state["research_query"]
        response = llm.invoke([
            SystemMessage(content="You are a research planner."),
            HumanMessage(content=f"Create 3-5 search queries for: {query}"),
        ])
        queries = parse_queries(response.content)
        return {
            "search_queries": queries,
            "current_stage": "searching",
        }

Figure: Node Anatomy
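
The `plan_research` node calls a `parse_queries` helper that isn't shown in this post. Here is one hypothetical way it could look, assuming the model replies with one query per line as a bulleted or numbered list. The regex and the `max_queries` parameter are my assumptions, not part of the original project.

```python
import re


def parse_queries(text: str, max_queries: int = 5) -> list[str]:
    """Extract one query per line, dropping list markers and blank lines."""
    queries = []
    for line in text.splitlines():
        # Strip leading bullets ("-", "*", "•") or numbering ("1.", "2)").
        cleaned = re.sub(r"^\s*(?:[-*•]|\d+[.)])\s*", "", line).strip().strip('"')
        if cleaned:
            queries.append(cleaned)
    return queries[:max_queries]


sample = """1. LangGraph checkpointing
2) "agent retry patterns"
- state reducers

"""
parsed = parse_queries(sample)
print(parsed)
```

Keeping parsing in its own function means the node stays small and the parser can be unit tested without calling a model.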

Step 3: Connect with Edges

Edges define flow. Static edges always go to the same node. Conditional edges make decisions:

    # Always go from plan to search
    workflow.add_edge("plan", "search")

    # After validation, decide based on results
    def route_validation(state):
        if state["current_stage"] == "processing":
            return "process"
        return "handle_error"

    workflow.add_conditional_edges(
        "validate",
        route_validation,
        {"process": "process", "handle_error": "handle_error"},
    )

This pattern handles validation failures, retries, and graceful degradation.

Step 4: Add Checkpointing

Production agents need checkpointing. Period.

    import sqlite3
    from langgraph.checkpoint.sqlite import SqliteSaver

    # Recent releases make from_conn_string a context manager, so pass a connection
    checkpointer = SqliteSaver(sqlite3.connect("agent.db", check_same_thread=False))
    app = workflow.compile(checkpointer=checkpointer)

Now state saves after every node, so a crashed run can resume from its last checkpoint when you invoke the graph again with the same thread_id.
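
To show what "save after every step, resume after a crash" means mechanically, here is a small simulation using only the standard library. It is not how LangGraph's checkpointer is implemented; the table layout, `run_with_checkpoints`, and the simulated crash are assumptions made for the sketch.

```python
import json
import sqlite3


def run_with_checkpoints(conn, thread_id, steps, state, fail_at=None):
    """Run steps in order, saving state after each; resume from the last save."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS checkpoints "
        "(thread_id TEXT PRIMARY KEY, next_step INTEGER, state TEXT)"
    )
    row = conn.execute(
        "SELECT next_step, state FROM checkpoints WHERE thread_id = ?", (thread_id,)
    ).fetchone()
    start = 0
    if row is not None:                      # a previous run left a checkpoint
        start, state = row[0], json.loads(row[1])
    for index in range(start, len(steps)):
        if index == fail_at:
            raise ConnectionError(f"simulated crash in step {index + 1}")
        state = steps[index](state)
        conn.execute(
            "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
            (thread_id, index + 1, json.dumps(state)),
        )
        conn.commit()
    return state


def make_step(n):
    # Each step records that it ran, so repeated work would be visible.
    return lambda state: {"ran": state["ran"] + [n]}


steps = [make_step(n) for n in range(1, 11)]
conn = sqlite3.connect(":memory:")

try:
    run_with_checkpoints(conn, "123", steps, {"ran": []}, fail_at=7)  # crash in step 8
except ConnectionError as exc:
    print(exc)

result = run_with_checkpoints(conn, "123", steps, {"ran": []})        # resume
print(result["ran"])
```

On the second call the fresh input is ignored because a checkpoint exists for that thread, and steps 1 through 7 are not repeated.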

Step 5: Execute with Observability

Stream execution to see what's happening:

    config = {"configurable": {"thread_id": "research-001"}}

    for step in app.stream(initial_state, config=config):
        node_name = list(step.keys())[0]
        print(f"Executing: {node_name}")
        print(f"Stage: {step[node_name]['current_stage']}")

Here's real output from a production run:

    14:54:36 - Creating research agent
    14:57:30 - Planning: Generated 5 search queries
    14:57:41 - Searching: 3/3 successful
    14:57:41 - Validating: 3 valid results
    15:03:26 - Processing: Extracted 5 key findings
    15:07:32 - Generating: Report complete

Full visibility into what happened, when, and why.

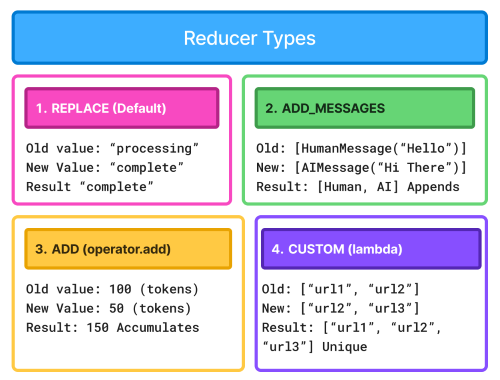

The Power of State Reducers

One subtle but critical concept: reducers. They control how state updates merge.

Figure: Reducer Types

Default behavior is replace: new value overwrites old. But for lists and counters, you need different logic:

    from operator import add

    # Replace (default)
    status: str  # New status replaces old

    # Accumulate
    total_tokens: Annotated[int, add]  # Adds to running total

    # Append
    messages: Annotated[list, add_messages]  # Appends to history

    # Custom
    urls: Annotated[list, lambda old, new: list(set(old + new))]  # Dedupes

Getting reducers wrong causes subtle bugs. Two nodes both update messages? Without add_messages, only the last one's messages survive.
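
If reducers still feel abstract, this plain-Python simulation may help. It mimics the merge step with a hypothetical `apply_update` function and a `REDUCERS` table. Neither is LangGraph API, and plain list concatenation stands in for `add_messages`, which does more in the real library.

```python
from operator import add

# Field name -> reducer. Fields without an entry are simply replaced.
REDUCERS = {
    "total_tokens": add,                                # accumulate
    "messages": add,                                    # list concatenation
    "urls": lambda old, new: sorted(set(old + new)),    # dedupe
}


def apply_update(state: dict, update: dict) -> dict:
    """Return a new state with `update` merged in, field by field."""
    merged = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        if reducer is not None and key in state:
            merged[key] = reducer(state[key], value)
        else:
            merged[key] = value                         # default: replace
    return merged


state = {"status": "new", "total_tokens": 0, "messages": [], "urls": []}
state = apply_update(state, {"status": "searching", "total_tokens": 120,
                             "messages": ["plan ready"], "urls": ["a.com"]})
state = apply_update(state, {"status": "done", "total_tokens": 80,
                             "messages": ["report ready"], "urls": ["a.com", "b.com"]})
print(state)
```

After both updates, `status` holds only the latest value while the other three fields carry contributions from both nodes.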

Production Patterns That Actually Work

After building several production agents, here are patterns that saved me:

Pattern 1: Retry with Backoff

Don't just retry immediately. Use exponential backoff:

    import time

    def agent_with_backoff(state):
        wait_time = state["backoff_seconds"]     # starts at 1
        if state["retry_count"] > 0:
            time.sleep(wait_time)                # only wait before a retry
            wait_time = min(wait_time * 2, 60)   # the next retry waits twice as long
        try:
            result = risky_operation()
            return {"result": result, "backoff_seconds": 1}
        except Exception:
            return {
                "retry_count": state["retry_count"] + 1,
                "backoff_seconds": wait_time,
            }

With backoff_seconds starting at 1, the first retry waits 1s, the second 2s, the third 4s, capped at 60s. This prevents hammering rate-limited APIs.
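
The same schedule can be written as a pure function, which is handy for testing the delays without actually sleeping. This is a sketch: `backoff_delay` and its `base` and `cap` parameters are names I've introduced here.

```python
def backoff_delay(retry_number: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before retry `retry_number` (1-based), doubling up to `cap`."""
    if retry_number < 1:
        raise ValueError("retry_number starts at 1")
    return min(base * 2 ** (retry_number - 1), cap)


schedule = [backoff_delay(n) for n in range(1, 9)]
print(schedule)
```

The cap matters: without it the eighth retry would wait over two minutes.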

Pattern 2: Error-Type Routing

Different errors need different handling:

    def route_error(state):
        error = state["error_message"]
        if "rate_limit" in error:
            return "backoff"  # Wait longer
        elif "auth" in error:
            return "refresh_credentials"
        elif "not_found" in error:
            return "try_fallback"
        else:
            return "retry"

A 404 error needs a different strategy than a rate limit.
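
As the number of error types grows, a lookup table can be easier to maintain than a long if/elif chain. Here is a table-driven variant of the same idea. The `ERROR_ROUTES` table, the lower-casing, and the sample messages are my assumptions for illustration.

```python
# Ordered (marker, route) pairs; the first marker found in the message wins.
ERROR_ROUTES = [
    ("rate_limit", "backoff"),
    ("auth", "refresh_credentials"),
    ("not_found", "try_fallback"),
]


def route_error(state: dict) -> str:
    """Map an error message to the name of the node that should handle it."""
    message = (state.get("error_message") or "").lower()
    for marker, route in ERROR_ROUTES:
        if marker in message:
            return route
    return "retry"                       # unknown errors get a plain retry


routes = {
    msg: route_error({"error_message": msg})
    for msg in ["rate_limit exceeded", "AUTH token expired",
                "not_found: /v1/doc/42", "socket closed"]
}
print(routes)
```

Substring matching is fragile (a message mentioning an "author" would match `auth`), so matching on exception types or status codes is usually safer when they are available.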

Pattern 3: Validation Loops

Build quality in:

    def route_validation(state):
        if validate(state["output"]) and state["retry_count"] < 3:
            return "success"
        elif state["retry_count"] >= 3:
            return "fail"
        else:
            return "improve"  # Loop back with feedback

Code doesn't compile? Loop back and fix it. Output quality low? Try again with better context.
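
For the "code doesn't compile" case, the validator can be as simple as Python's built-in `compile`. The sketch below walks through a list of candidate drafts until one is syntactically valid or the retry budget runs out. The drafts stand in for successive model outputs; `compiles` and `refine_until_valid` are hypothetical helpers, not part of LangGraph.

```python
def compiles(source: str) -> bool:
    """Return True if `source` is syntactically valid Python."""
    try:
        compile(source, "<candidate>", "exec")
        return True
    except SyntaxError:
        return False


def refine_until_valid(drafts: list[str], max_retries: int = 3) -> dict:
    """Try drafts in order; stop at the first that compiles or when retries run out."""
    retry_count = 0
    for source in drafts:
        if compiles(source):
            return {"status": "success", "output": source, "retry_count": retry_count}
        if retry_count >= max_retries:
            break
        retry_count += 1                 # loop back with the next (improved) draft
    return {"status": "fail", "output": None, "retry_count": retry_count}


# Hypothetical drafts: the first has a syntax error, the second fixes it.
drafts = ["def add(a, b) return a + b", "def add(a, b):\n    return a + b\n"]
outcome = refine_until_valid(drafts)
print(outcome["status"], outcome["retry_count"])
```

A syntax check only proves the code parses. In a real agent you would likely follow it with tests or a linter before declaring success.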

Common Pitfalls (And How to Avoid Them)

Pitfall 1: Infinite Loops

Always have an exit condition:

    # BAD - loops forever if error persists
    def route(state):
        if state["error"]:
            return "retry"
        return "continue"

    # GOOD - circuit breaker
    def route(state):
        if state["retry_count"] >= 5:
            return "fail"
        elif state["error"]:
            return "retry"
        return "continue"

Pitfall 2: No Error Handling

Wrap risky operations:

    def safe_node(state):
        try:
            result = api_call()
            return {"result": result, "status": "success"}
        except Exception as e:
            return {
                "status": "error",
                "error_message": str(e),
                "retry_count": state["retry_count"] + 1,
            }

One unhandled exception crashes your entire graph.
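
Writing the same try/except in every node gets repetitive. One option is a decorator that applies the pattern uniformly. This is a sketch under my own naming: `safe`, `fetch`, and the `simulate_failure` flag are all hypothetical.

```python
import functools


def safe(node):
    """Wrap a node so exceptions become state updates instead of crashes."""
    @functools.wraps(node)
    def wrapper(state: dict) -> dict:
        try:
            update = node(state)
            return {**update, "status": "success"}
        except Exception as exc:          # deliberately broad: last line of defence
            return {
                "status": "error",
                "error_message": f"{type(exc).__name__}: {exc}",
                "retry_count": state.get("retry_count", 0) + 1,
            }
    return wrapper


@safe
def fetch(state: dict) -> dict:
    # Hypothetical node: fails when asked to, succeeds otherwise.
    if state.get("simulate_failure"):
        raise TimeoutError("upstream took too long")
    return {"result": "payload"}


ok = fetch({"retry_count": 0})
failed = fetch({"retry_count": 0, "simulate_failure": True})
print(ok)
print(failed)
```

Including the exception type in `error_message` gives an error router something more reliable to match on than free-form text.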

Pitfall 3: Forgetting Checkpointing

Development without checkpointing is fine. Production without checkpointing is a disaster. Always compile with a checkpointer:

    import sqlite3
    from langgraph.checkpoint.memory import MemorySaver
    from langgraph.checkpoint.sqlite import SqliteSaver

    # Development
    app = workflow.compile(checkpointer=MemorySaver())

    # Production (recent releases make from_conn_string a context manager, so pass a connection)
    app = workflow.compile(
        checkpointer=SqliteSaver(sqlite3.connect("agent.db", check_same_thread=False))
    )

Pitfall 4: Ignoring State Reducers

Default behavior loses data:

    # BAD - second node overwrites first node's messages
    messages: list[BaseMessage]

    # GOOD - accumulates messages
    messages: Annotated[list[BaseMessage], add_messages]

Test your reducers. Make sure state updates as expected.

Pitfall 5: State Bloat

Don't store large documents in state:

    # BAD - checkpointing writes MBs to disk
    documents: list[str]  # Entire documents

    # GOOD - store references, fetch on demand
    document_ids: list[str]  # Just IDs

Keep state under 100KB for fast checkpointing.
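
A quick way to catch state bloat early is to estimate the serialized size in a test or a debug hook. The sketch below uses JSON as a rough proxy. Real checkpointers use their own serializers, so treat the number as an estimate; `state_size_bytes` and `oversized_fields` are hypothetical helpers.

```python
import json

MAX_STATE_BYTES = 100 * 1024   # the 100 KB budget suggested above


def state_size_bytes(state: dict) -> int:
    """Approximate serialized size by encoding the state as UTF-8 JSON."""
    return len(json.dumps(state, default=str).encode("utf-8"))


def oversized_fields(state: dict, limit: int = MAX_STATE_BYTES) -> list[str]:
    """Return field names, largest first, if the whole state exceeds `limit`."""
    if state_size_bytes(state) <= limit:
        return []
    sizes = {key: state_size_bytes({key: value}) for key, value in state.items()}
    return sorted(sizes, key=sizes.get, reverse=True)


lean = {"document_ids": ["doc-1", "doc-2"], "status": "ok"}
bloated = {"documents": ["x" * 200_000], "status": "ok"}
print(state_size_bytes(lean), oversized_fields(lean))
print(oversized_fields(bloated))
```

Listing fields largest first points straight at what to move out of state and behind an ID.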

Visualizing Your Graph

LangGraph generates diagrams automatically:

    from IPython.display import Image, display

    display(Image(app.get_graph().draw_mermaid_png()))

Figure: Workflow Visualization

This catches design flaws before you deploy. Missing edge? Unreachable node? You'll see it immediately.
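
Eyeballing a diagram works for small graphs. For larger ones, the same check can be automated. The sketch below is a plain-Python reachability test over a hand-written edge table. It does not read anything from a compiled LangGraph app, and the node names and the missing edge are assumptions for the example.

```python
def unreachable_nodes(nodes: set[str], edges: dict[str, list[str]], entry: str) -> set[str]:
    """Return nodes that cannot be reached from `entry` by following edges."""
    seen = set()
    stack = [entry]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        stack.extend(edges.get(current, []))
    return nodes - seen


# Hypothetical wiring of the research agent with one edge forgotten:
# nothing points at "generate".
nodes = {"plan", "search", "validate", "process", "handle_error", "generate"}
edges = {
    "plan": ["search"],
    "search": ["validate"],
    "validate": ["process", "handle_error"],
}
orphans = unreachable_nodes(nodes, edges, entry="plan")
print(orphans)
```

A check like this fits naturally in a unit test, so a forgotten edge fails CI instead of surfacing in production.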

Real-World Performance Numbers

Here's what happened when I moved a research agent from chains to graphs:

Before (chains):

- Network timeout on step 8 → restart from step 1
- Cost: $0.50 per failure (7 wasted LLM calls)
- Debugging time: 2 hours (no observability)
- Success rate: 60% (failures compounded)

After (LangGraph):

- Network timeout on step 8 → resume from step 8
- Cost: $0.05 per retry (1 retried call)
- Debugging time: 10 minutes (full logs)
- Success rate: 95% (retries work)

The retry logic alone paid for the migration in a week.

Testing Production Agents

Unit test your nodes:

    def test_plan_research():
        state = {"research_query": "AI trends"}
        result = plan_research(state)
        assert "search_queries" in result
        assert len(result["search_queries"]) > 0

Test your routers:

    def test_retry_routing():
        # Should retry
        state = {"retry_count": 1, "max_retries": 3}
        assert route_retry(state) == "retry"

        # Should give up
        state = {"retry_count": 3, "max_retries": 3}
        assert route_retry(state) == "fail"

Integration test the full graph:

    def test_agent_end_to_end():
        result = app.invoke(initial_state, config)
        assert result["current_stage"] == "complete"
        assert result["report"] != ""
        assert result["retry_count"] <= result["max_retries"]

These tests saved me hours of production debugging.
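
Node tests that call a real model are slow and nondeterministic. One way around that is to inject the model and swap in a stub during tests. The sketch below assumes the node is built by a factory. `FakeLLM` and `make_plan_node` are hypothetical names, and the prompt is a plain string rather than LangChain message objects to keep the example dependency-free.

```python
from types import SimpleNamespace


class FakeLLM:
    """Stand-in model that records prompts and returns a canned reply."""

    def __init__(self, reply: str):
        self.reply = reply
        self.calls = []

    def invoke(self, messages):
        self.calls.append(messages)
        return SimpleNamespace(content=self.reply)


def make_plan_node(llm):
    """Build a planning node around any object with an `invoke` method."""
    def plan_research(state: dict) -> dict:
        response = llm.invoke([f"Create 3-5 search queries for: {state['research_query']}"])
        queries = [line.strip() for line in response.content.splitlines() if line.strip()]
        return {"search_queries": queries, "current_stage": "searching"}
    return plan_research


def test_plan_research_offline():
    llm = FakeLLM("ai agents 2024\nlanggraph adoption\n")
    result = make_plan_node(llm)({"research_query": "AI trends"})
    assert result["search_queries"] == ["ai agents 2024", "langgraph adoption"]
    assert result["current_stage"] == "searching"
    assert len(llm.calls) == 1           # exactly one model call


test_plan_research_offline()
```

The stub also records what the node sent, so the test can assert on the prompt as well as the result.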

When to Use Graphs vs Chains

Use chains when:

- Simple sequential workflow
- No conditional logic needed
- Single LLM call
- Prototyping quickly

Use graphs when:

- Conditional routing required
- Need retry logic
- Long-running workflows
- Production deployment
- Error handling critical

Rule of thumb: If your agent has more than 3 steps or any branching, use a graph.

Getting Started: Complete Working Example

I've packaged everything into a downloadable project:

GitHub: LangGraph Research Agent

The repo includes:

- Complete source code
- 3 working examples (basic, streaming, checkpointing)
- Unit tests
- Production-ready configuration
- Comprehensive documentation

Quick start: follow the instructions in the GitHub repository.

You'll see the agent plan, search, validate, process, and generate a report—with full observability and automatic retries.

Key Takeaways

Building production agents isn't about fancy prompts. It's about engineering reliability into the system:

- Explicit state makes agents debuggable
- Conditional routing handles real-world complexity
- Checkpointing prevents wasted work
- Retry logic turns transient failures into eventual success
- Observability shows you exactly what happened

LangGraph gives you all of these. The learning curve is worth it.

Start with the research agent example. Modify it for your use case. Add nodes, adjust routing, customize state. The patterns scale from 3-node prototypes to 20-node production systems.

What's Next

This covers deterministic workflows—agents that follow explicit paths. The next step is self-correction: agents that reason about their own execution and fix mistakes.

That's Plan → Execute → Reflect → Refine loops, which we'll cover in Module 4.

But master graphs first. You can't build agents that improve themselves if you can't build agents that execute reliably.

About This Series

This post is part of Building Real-World Agentic AI Systems with LangGraph, a comprehensive guide to production-ready AI agents. The series covers:

- Module 3: Deterministic Agent Flow (This Post)
- Module 4: Planning & Self-Correction
- Module 5: Multi-Agent Systems
- And more...

Building agents that actually work in production is hard. But with the right patterns, it's definitely achievable. LangGraph gives you those patterns.

Now go build something real.